To begin understanding the technical side of Six Sigma, you have to first answer a seemingly straightforward question: What is quality? A traditional and widely held definition of quality is

Quality = compliance with specifications

The following thought experiment walks through the traditional definition of quality and shows why measuring quality this way is flawed.

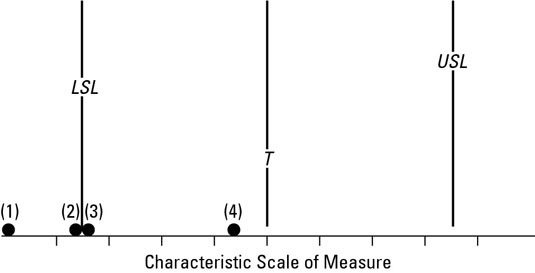

The horizontal axis represents the measurement scale for a characteristic that is critical to your customer. It may be the diameter of a part, the cycle time of a process, or the cost of a service: whatever characteristic matters most to your customer. The target (T) and the upper and lower specification limits for this characteristic are also shown.

Now imagine four parts that have come out of this process. You measure how each one performs on this critical customer characteristic. Part 1 measures far outside the allowed specification window. Part 2 is just outside the allowable window by the smallest margin possible. Part 3 is just inside the allowable window, also by the smallest of margins. Part 4 is nearly right at the ideal target value.

Deciding which of these four parts has the worst quality is pretty easy: Part 1 is clearly the worst. Picking the highest-quality part is easy, too; part 4 nearly matches the customer’s ideal target value. But what about parts 2 and 3? Which of these two has higher quality?

Remember, part 2 is just outside the specification limit. Part 3 is just inside. Does that mean part 2 is “bad” and part 3 is “good”? Think of how these two parts will perform in the customer’s hands; will part 2 definitely fail before part 3? With only the slightest imaginable difference between parts 2 and 3, do you think the customer will perceive a difference in their performance?

The answer is no. From the customer’s perspective, parts 2 and 3 are the same.
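A minimal Python sketch of the traditional pass/fail view makes the problem concrete. The spec limits (9.0 to 11.0), the target (10.0), and the four measurements are hypothetical values chosen only to mirror the thought experiment, and `in_spec` is an illustrative helper, not a standard function.

```python
# Hypothetical spec for a critical characteristic (e.g. a diameter in mm)
LSL, TARGET, USL = 9.0, 10.0, 11.0

# Hypothetical measurements for the four parts in the thought experiment
parts = {
    "Part 1": 12.5,    # far outside the window
    "Part 2": 11.001,  # just above the upper limit
    "Part 3": 10.999,  # just below the upper limit
    "Part 4": 10.01,   # nearly on target
}

def in_spec(x: float, lsl: float = LSL, usl: float = USL) -> bool:
    """Traditional definition: a part is 'good' if and only if it lies within the limits."""
    return lsl <= x <= usl

for name, x in parts.items():
    verdict = "good" if in_spec(x) else "bad"
    print(f"{name}: {x:.3f} -> {verdict} (distance from target {abs(x - TARGET):.3f})")
```

Parts 2 and 3 differ by only 0.002 yet receive opposite verdicts, while parts 3 and 4 receive the same verdict despite sitting at very different distances from the target.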

A hard lesson

In the 1980s, Ford Motor Company had a wake-up call about what quality is and isn’t. Back then, its Batavia, Ohio, plant religiously followed a quality policy based on the belief that compliance with customer specifications defined a high-quality product: Make the part in spec, and it would be “good”; make the part out of spec, and it would be “bad.”

Ford set up its operating policies and procedures, and trained all its employees, so that nothing could advance in production or leave the factory without measured proof that the characteristic was within the required limits.

At the same time, Ford was working with Mazda in a joint venture: both companies produced exactly the same automatic transmission from the same blueprints, with the same critical characteristics and specifications. Ford’s transmissions went into its Probe, while Mazda’s went into its MX-6. You may remember these cars.

As both companies built transmissions and customer reports started to come back, Ford began to see a stark difference in the data: transmissions Ford built were several times more likely to have a problem and require warranty service than the same transmissions built by Mazda. How could that be?

Ford had never worked harder to make sure every critical characteristic was within the required spec limits. It had strictly followed its quality procedures and had made sure that no part exceeding the spec limits ever left the factory. Why, then, were Mazda’s transmissions so much more reliable?

Ford had to know why, so it acquired several transmission samples — some from its own factory and some from Mazda — and began tearing them apart and studying them to find the root cause.

First, Ford compared the transmissions for vibration. A good transmission with high reliability runs smoothly, with little vibration. In the tests, the Mazda transmissions had vibration levels so much lower than anything Ford had ever seen that Ford first thought its vibration test equipment was broken! But the tests turned out to be accurate; Mazda’s transmissions simply ran that much more smoothly than Ford’s.

The folks at Ford also did dimensional checks of all the transmissions’ critical parameters. They found that the distribution of measurements from the Ford samples did meet the goal of being within customer specifications, but the variation in the parts used up the entire width of the spec window, with just as many parts near the edges of the spec limits as in the middle.

Mazda’s transmissions, on the other hand, showed a marked difference in consistency; all their measurements were clustered at the center of each spec, always very close to the ideal target value.
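The contrast between the two plants can be illustrated with a small simulation. It is only a sketch under assumed conditions: the spec (9.0 to 11.0, target 10.0), the uniform spread for the “Ford-like” process, and the standard deviation of 0.15 for the “Mazda-like” process are hypothetical stand-ins, not data from the study. `summarize` is an illustrative helper.

```python
import random
import statistics

# Hypothetical spec, identical for both plants
LSL, TARGET, USL = 9.0, 10.0, 11.0
N = 10_000
rng = random.Random(42)  # fixed seed so the sketch is repeatable

# Assumed "Ford-like" process: parts spread evenly across the whole spec window
ford = [rng.uniform(LSL, USL) for _ in range(N)]
# Assumed "Mazda-like" process: parts clustered tightly around the target
mazda = [rng.gauss(TARGET, 0.15) for _ in range(N)]

def summarize(name, xs):
    """Report compliance, closeness to target, and spread for one process."""
    in_spec = sum(LSL <= x <= USL for x in xs) / len(xs)
    near_target = sum(abs(x - TARGET) <= 0.25 for x in xs) / len(xs)
    sd = statistics.stdev(xs)
    print(f"{name}: in spec {in_spec:.1%}, within 0.25 of target {near_target:.1%}, "
          f"std dev {sd:.3f}")
    return in_spec, near_target, sd

ford_stats = summarize("Ford-like", ford)
mazda_stats = summarize("Mazda-like", mazda)
```

Both simulated processes put essentially every part inside the spec window, so a compliance metric alone cannot tell them apart; what differs is the spread and the share of parts close to the target.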

Ford quickly began to understand the reason for its lower profitability and performance. The quality it sought didn’t come from just getting parts inside the spec window. Instead, Ford found that quality came from getting all the parts on target with minimal variation.

Ford’s chief of operations even published an internal video of the study’s findings. In it, he admitted that Ford’s quality policy and procedures had been “wrong”: its own policies had led to the poor quality and business problems it was experiencing. This painful experience was one of the things that motivated Ford to change the way it thought about quality.

Contrary to the traditional definition, quality is not equivalent to a part’s or process’s compliance with specifications. A better, more realistic definition of quality is

Quality = on-target performance with as little variation as possible

This definition matters because, in reality, quality problems grow steadily the farther performance strays from the ideal target, even while it remains within specifications. That makes the target the most important part of a specification, and getting a characteristic to operate on target with as little variation as possible should be your focus.
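One common way to put numbers on this idea is Taguchi’s quadratic loss function, L(y) = k(y − T)², where y is the measured value, T is the target, and k is a cost constant. Averaged over a process with mean μ and standard deviation σ, the expected loss per part is k[σ² + (μ − T)²]. The sketch below assumes k = 1 and hypothetical spec and process values; the function names are illustrative, and this is not Ford’s or Mazda’s actual analysis.

```python
# Hypothetical spec and cost constant k (cost per squared unit of deviation)
LSL, TARGET, USL = 9.0, 10.0, 11.0
K = 1.0

def step_loss(y: float) -> float:
    """Traditional view: no loss inside the limits, full loss outside."""
    return 0.0 if LSL <= y <= USL else 1.0

def quadratic_loss(y: float, k: float = K) -> float:
    """Taguchi-style loss: grows with the square of the distance from target."""
    return k * (y - TARGET) ** 2

def expected_loss(mean: float, sd: float, k: float = K) -> float:
    """Average quadratic loss per part for a process with this mean and std dev."""
    return k * (sd ** 2 + (mean - TARGET) ** 2)

# Hypothetical values for parts 2 and 3 of the thought experiment
for y in (11.001, 10.999):
    print(f"y={y}: step loss {step_loss(y)}, quadratic loss {quadratic_loss(y):.4f}")

# Assumed processes, both centered on target: uniform over the whole window vs. tight spread
ford_sd = (USL - LSL) / 12 ** 0.5  # std dev of a uniform distribution of this width
mazda_sd = 0.15
print(f"Ford-like expected loss:  {expected_loss(TARGET, ford_sd):.4f}")
print(f"Mazda-like expected loss: {expected_loss(TARGET, mazda_sd):.4f}")
```

Under these assumptions, parts 2 and 3 each carry a quadratic loss of about 1.0 even though the step loss jumps from 0 to 1 between them, and the Ford-like process has an expected loss of about 0.333 per part versus 0.0225 for the Mazda-like process, roughly 15 times higher.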