Autocorrelation, also known as serial correlation, may exist in a regression model when the order of the observations in the data matters. In other words, with time-series (and sometimes panel or longitudinal) data, autocorrelation is a concern.

Most of the classical linear regression model (CLRM) assumptions that allow econometricians to prove the desirable properties of the ordinary least squares (OLS) estimators (the Gauss-Markov theorem) directly involve characteristics of the error term. One of these assumptions concerns the relationship between values of the error term: the CLRM assumes there’s no autocorrelation.

No autocorrelation refers to a situation in which no identifiable relationship exists between the values of the error term. Econometricians express no autocorrelation as

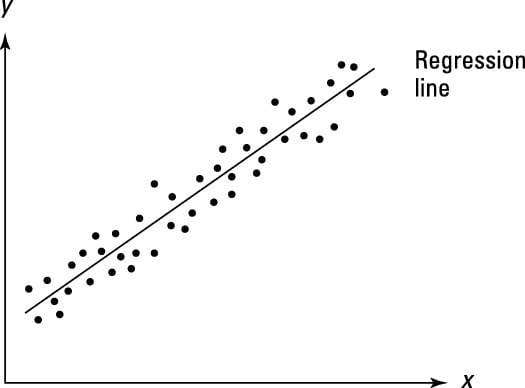

The figure shows the errors from a model that satisfies the CLRM assumption of no autocorrelation. As you can see, when the error term exhibits no autocorrelation, positive and negative error values follow no pattern: the sign of one error tells you nothing about the sign of the next.
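To make "no pattern" concrete, here is a minimal Python sketch that simulates 500 independent errors. It assumes normally distributed errors with mean 0 and standard deviation 1 (the text doesn't specify a distribution), and the helper names `lag1_autocorr` and `sign_change_share` are hypothetical. Without autocorrelation, the sample correlation between each error and the one before it should be close to 0, and consecutive errors should switch sign roughly half the time.

```python
import random

def lag1_autocorr(e):
    """Sample correlation between each error and the error before it."""
    n = len(e)
    mean = sum(e) / n
    num = sum((e[t] - mean) * (e[t - 1] - mean) for t in range(1, n))
    den = sum((v - mean) ** 2 for v in e)
    return num / den

def sign_change_share(e):
    """Share of consecutive pairs of errors whose signs differ."""
    changes = sum(1 for t in range(1, len(e)) if (e[t] > 0) != (e[t - 1] > 0))
    return changes / (len(e) - 1)

random.seed(1)
# Assumption: independent N(0, 1) draws, so no error depends on the one before it
errors = [random.gauss(0.0, 1.0) for _ in range(500)]

print(f"lag-1 autocorrelation: {lag1_autocorr(errors):.3f}")
print(f"share of sign changes: {sign_change_share(errors):.3f}")
```

The exact numbers depend on the seed; with independent errors, neither summary should stray far from 0 and 0.5, respectively.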

When autocorrelation does occur, it takes either positive or negative form. Autocorrelation can also be identified incorrectly. The following sections explain how to distinguish between positive and negative autocorrelation as well as how to avoid falsely stating that autocorrelation exists.

Positive versus negative autocorrelation

If autocorrelation is present, positive autocorrelation is the most likely outcome. Positive autocorrelation occurs when an error of a given sign tends to be followed by an error of the same sign. For example, positive errors are usually followed by positive errors, and negative errors are usually followed by negative errors.

Positive autocorrelation is expressed as

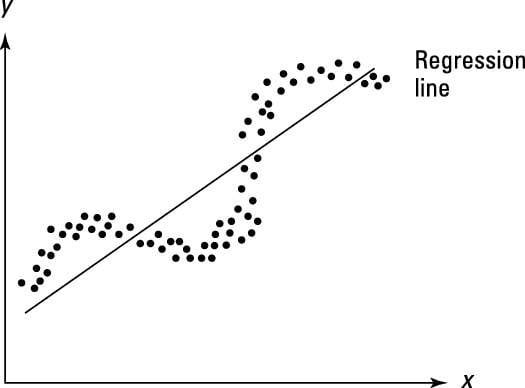

The positive autocorrelation pattern depicted in the figure is only one among several possible patterns. An error term with a sequencing of positive and negative error values usually indicates positive autocorrelation. Sequencing refers to a situation in which most positive errors are followed or preceded by other positive errors and most negative errors are followed or preceded by other negative errors.
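One common way to generate positive autocorrelation is a first-order autoregressive, or AR(1), error process, `e_t = rho * e_{t-1} + u_t` with rho > 0. The sketch below assumes that process with rho = 0.8 and standard normal u_t (illustrative choices, not something the text prescribes) and compares it with independent errors (rho = 0). The function names are hypothetical. Sequencing shows up as fewer sign changes and longer streaks of same-signed errors.

```python
import math
import random

def simulate_ar1(rho, n, seed):
    """Hypothetical AR(1) errors: e_t = rho * e_{t-1} + u_t, with u_t ~ N(0, 1)."""
    rng = random.Random(seed)
    # Draw the first error from the stationary distribution of the process
    e = [rng.gauss(0.0, 1.0 / math.sqrt(1.0 - rho ** 2))]
    for _ in range(n - 1):
        e.append(rho * e[-1] + rng.gauss(0.0, 1.0))
    return e

def sign_change_share(e):
    """Share of consecutive pairs of errors whose signs differ."""
    changes = sum(1 for t in range(1, len(e)) if (e[t] > 0) != (e[t - 1] > 0))
    return changes / (len(e) - 1)

def longest_run(e):
    """Length of the longest streak of consecutive errors with the same sign."""
    best = run = 1
    for t in range(1, len(e)):
        run = run + 1 if (e[t] > 0) == (e[t - 1] > 0) else 1
        best = max(best, run)
    return best

independent = simulate_ar1(0.0, 500, seed=3)
positive = simulate_ar1(0.8, 500, seed=3)

for label, e in [("independent", independent), ("positive (rho = 0.8)", positive)]:
    print(f"{label:22s} sign changes: {sign_change_share(e):.2f}, longest run: {longest_run(e)}")
```

For normally distributed AR(1) errors, the probability that two consecutive errors differ in sign is arccos(rho)/π, which is about 0.2 when rho = 0.8, compared with 0.5 for independent errors.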

Although less likely, negative autocorrelation is also possible. Negative autocorrelation occurs when an error of a given sign tends to be followed by an error of the opposite sign. For instance, positive errors are usually followed by negative errors, and negative errors are usually followed by positive errors.

Negative autocorrelation is expressed as

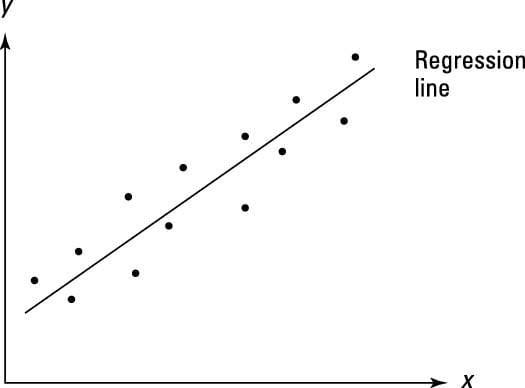

The figure illustrates the typical pattern of negative autocorrelation. An error term with a switching of positive and negative error values usually indicates negative autocorrelation. A switching pattern is the opposite of sequencing: most positive errors tend to be followed or preceded by negative errors, and vice versa.
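To see switching, the sketch below assumes a hypothetical AR(1) error process `e_t = rho * e_{t-1} + u_t` with a negative coefficient, rho = -0.8, and standard normal u_t (illustrative choices). With a negative rho, each error tends to take the opposite sign of the one before it, so the share of sign changes should be well above one half.

```python
import math
import random

def simulate_ar1(rho, n, seed):
    """Hypothetical AR(1) errors: e_t = rho * e_{t-1} + u_t, with u_t ~ N(0, 1)."""
    rng = random.Random(seed)
    # Draw the first error from the stationary distribution of the process
    e = [rng.gauss(0.0, 1.0 / math.sqrt(1.0 - rho ** 2))]
    for _ in range(n - 1):
        e.append(rho * e[-1] + rng.gauss(0.0, 1.0))
    return e

def sign_change_share(e):
    """Share of consecutive pairs of errors whose signs differ."""
    changes = sum(1 for t in range(1, len(e)) if (e[t] > 0) != (e[t - 1] > 0))
    return changes / (len(e) - 1)

negative = simulate_ar1(-0.8, 500, seed=3)
print(f"negative (rho = -0.8) sign changes: {sign_change_share(negative):.2f}")
```

For normally distributed AR(1) errors with rho = -0.8, the expected share of sign changes is arccos(-0.8)/π, or about 0.8.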

Whether the autocorrelation is positive or negative, its presence means the OLS estimators are generally no longer efficient (that is, they no longer achieve the smallest variance among linear unbiased estimators). In addition, the estimated standard errors of the coefficients are biased, which makes hypothesis tests based on them (such as t-statistics) unreliable. The OLS estimates, however, remain unbiased.
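A small Monte Carlo experiment can illustrate both claims. The sketch below assumes a simple regression `y = 1 + 2x + e` in which the regressor x and the error e are independent AR(1) processes with rho = 0.8 (all values are arbitrary choices for illustration, and the helper names are hypothetical). It re-estimates the slope 1,000 times and compares the actual spread of the estimates with the standard error reported by the conventional OLS formula, which assumes no autocorrelation.

```python
import math
import random
import statistics

def ar1(rho, n, rng):
    """AR(1) series e_t = rho * e_{t-1} + u_t, u_t ~ N(0, 1), started at its stationary distribution."""
    e = [rng.gauss(0.0, 1.0 / math.sqrt(1.0 - rho ** 2))]
    for _ in range(n - 1):
        e.append(rho * e[-1] + rng.gauss(0.0, 1.0))
    return e

def ols_slope_and_se(x, y):
    """Simple-regression OLS slope and its conventional (no-autocorrelation) standard error."""
    n = len(x)
    xbar, ybar = sum(x) / n, sum(y) / n
    sxx = sum((xi - xbar) ** 2 for xi in x)
    b1 = sum((xi - xbar) * (yi - ybar) for xi, yi in zip(x, y)) / sxx
    b0 = ybar - b1 * xbar
    ssr = sum((yi - b0 - b1 * xi) ** 2 for xi, yi in zip(x, y))
    sigma2 = ssr / (n - 2)  # usual estimate of the error variance
    return b1, math.sqrt(sigma2 / sxx)

rng = random.Random(42)
TRUE_B0, TRUE_B1, RHO, N, REPS = 1.0, 2.0, 0.8, 50, 1000

slopes, ses = [], []
for _ in range(REPS):
    x = ar1(RHO, N, rng)  # a persistent regressor, common in time-series data
    e = ar1(RHO, N, rng)  # positively autocorrelated errors, independent of x
    y = [TRUE_B0 + TRUE_B1 * xi + ei for xi, ei in zip(x, e)]
    b1, se = ols_slope_and_se(x, y)
    slopes.append(b1)
    ses.append(se)

print(f"mean slope estimate:       {statistics.mean(slopes):.3f} (true value {TRUE_B1})")
print(f"actual spread of slopes:   {statistics.stdev(slopes):.3f}")
print(f"average reported OLS s.e.: {statistics.mean(ses):.3f}")
```

In a run like this, the average slope should land close to the true value of 2, while the average reported standard error should be noticeably smaller than the actual standard deviation of the estimates. Because the reported standard errors are too small in this setting, t-statistics computed from them overstate significance.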

Misspecification and autocorrelation

When you’re drawing conclusions about autocorrelation using the error pattern, all other CLRM assumptions must hold, especially the assumption that the model is correctly specified. If a model isn’t correctly specified, you may mistakenly identify the model as suffering from autocorrelation.

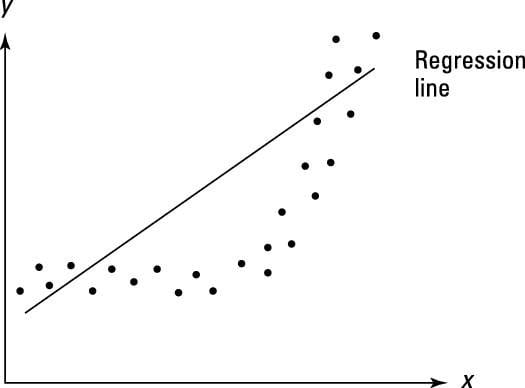

The figure illustrates a scenario in which the model has been inappropriately specified as linear when the relationship is nonlinear. This misspecification produces an error pattern that resembles positive autocorrelation.

Perform misspecification checks if there’s evidence of autocorrelation and you’re uncertain about the accuracy of the specification. Misspecification is a more serious issue than autocorrelation because, if the model isn’t correctly specified, you can’t prove that the OLS estimators are unbiased.
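The sketch below mimics that scenario under stated assumptions: the true relationship is `y = 1 + 0.5x^2 + e` with independent normal errors, and the observations are ordered by x (all values and helper names are hypothetical). Fitting a straight line leaves residuals that are positive at both ends and negative in the middle, so their lag-1 autocorrelation looks strongly positive even though the true errors have none. Regressing y on x² instead, which is the correct functional form here, should remove most of that apparent autocorrelation.

```python
import random

def fit_line(x, y):
    """OLS intercept and slope for a simple regression of y on x."""
    n = len(x)
    xbar, ybar = sum(x) / n, sum(y) / n
    b1 = (sum((xi - xbar) * (yi - ybar) for xi, yi in zip(x, y))
          / sum((xi - xbar) ** 2 for xi in x))
    return ybar - b1 * xbar, b1

def residuals(x, y):
    """Residuals from the simple OLS regression of y on x."""
    b0, b1 = fit_line(x, y)
    return [yi - b0 - b1 * xi for xi, yi in zip(x, y)]

def lag1_autocorr(e):
    """Sample correlation between each residual and the one before it."""
    n = len(e)
    mean = sum(e) / n
    num = sum((e[t] - mean) * (e[t - 1] - mean) for t in range(1, n))
    return num / sum((v - mean) ** 2 for v in e)

random.seed(7)
x = [i / 20 for i in range(100)]  # observations ordered by x
# Assumed true model: quadratic in x, with independent errors
y = [1.0 + 0.5 * xi ** 2 + random.gauss(0.0, 0.5) for xi in x]

# Misspecified: a straight line in x
r_linear = lag1_autocorr(residuals(x, y))
# Correct functional form: regress y on x squared
r_quadratic = lag1_autocorr(residuals([xi ** 2 for xi in x], y))

print(f"residual lag-1 autocorrelation, linear fit:    {r_linear:.3f}")
print(f"residual lag-1 autocorrelation, quadratic fit: {r_quadratic:.3f}")
```

If the apparent autocorrelation largely disappears once the functional form is corrected, the pattern came from misspecification rather than from the errors themselves.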